Adversarial Multi-task Learning for Text Classification

by Pengfei Liu, Xipeng Qiu, Xuanjing Huang

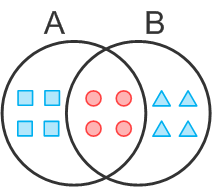

Fig. Two sharing schemes for task A and task B. The overlap between two black circles denotes shared space. The blue triangles and boxes represent the task-specific features while the red circles denote the features which can be shared.

Abstract

Neural network models have shown promising potential for multi-task learning, where most approaches focus on learning shared layers that extract common, task-invariant features. However, in most existing approaches, the extracted shared features are prone to contamination by task-specific features or by noise introduced by other tasks. In this paper, we propose an adversarial multi-task learning framework that prevents the shared and private latent feature spaces from interfering with each other. We conduct extensive experiments on 16 different text classification tasks, which demonstrate the benefits of our approach. In addition, we show that the shared knowledge learned by our proposed model can be regarded as off-the-shelf knowledge and easily transferred to new tasks.
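The adversarial idea in the abstract can be illustrated with a minimal, framework-free sketch of the loss terms. It assumes the shared-private layout from the figure (one shared encoder plus a private encoder per task) and uses plain Python lists in place of tensors; it only computes losses, with no gradients or training loop. The function names, the orthogonality penalty ||S^T H||_F^2 between shared and private features, and the weights `lam` and `gamma` are illustrative assumptions, not the released code.

```python
import math
from typing import List, Sequence

# Plain lists stand in for tensors; each row is one sentence in a mini-batch.
Matrix = List[List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one vector of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def discriminator_loss(task_logits: Sequence[Sequence[float]],
                       task_ids: Sequence[int]) -> float:
    """Mean cross-entropy of a task discriminator that predicts, from each
    shared feature vector, which task the sentence came from.
    The discriminator minimizes this value; the shared encoder maximizes it,
    pushing shared features to carry no task identity (a min-max game)."""
    total = 0.0
    for logits, k in zip(task_logits, task_ids):
        total -= math.log(softmax(logits)[k])
    return total / len(task_ids)


def orthogonality_penalty(shared: Matrix, private: Matrix) -> float:
    """Squared Frobenius norm ||S^T H||_F^2 for one task's batch (assumed term).
    Row i of `shared` (S) and row i of `private` (H) encode the same sentence.
    The penalty is zero when every shared dimension is orthogonal, over the
    batch, to every private dimension."""
    penalty = 0.0
    for a in range(len(shared[0])):
        for b in range(len(private[0])):
            # (S^T H)[a][b] = sum over batch rows i of S[i][a] * H[i][b]
            entry = sum(s_row[a] * h_row[b] for s_row, h_row in zip(shared, private))
            penalty += entry ** 2
    return penalty


def encoder_objective(task_loss: float, adv_loss: float, ortho_loss: float,
                      lam: float, gamma: float) -> float:
    """Objective minimized by the encoders and task classifiers (hypothetical
    weighting). Subtracting the discriminator loss rewards the shared encoder
    for fooling the discriminator."""
    return task_loss - lam * adv_loss + gamma * ortho_loss


if __name__ == "__main__":
    # Uniform logits over 4 tasks: the discriminator is just guessing.
    print(discriminator_loss([[0.0, 0.0, 0.0, 0.0]], [2]))  # ≈ 1.386, i.e. log(4)
    # One sentence, shared features [1, 2], private feature [3].
    print(orthogonality_penalty([[1.0, 2.0]], [[3.0]]))  # 45.0
```

The sign in `encoder_objective` is the design choice that matters: with a plus sign the shared encoder would cooperate with the discriminator and encode task identity, which is exactly the contamination the abstract describes.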

Dataset

- The datasets for all 16 tasks are publicly available.

BibTeX

- Please cite the following paper when you use the dataset or code:

```bibtex
@article{liu2017adversarial,
  title={Adversarial Multi-task Learning for Text Classification},
  author={Liu, Pengfei and Qiu, Xipeng and Huang, Xuanjing},
  journal={arXiv preprint arXiv:1704.05742},
  year={2017}
}
```