Contextualized non-local neural networks for sequence learning

Pengfei Liu, Shuaicheng Chang, Jian Tang, Jackie Chi Kit Cheung

Fig.: What would happen if GNNs meet the Transformer?

Contributions

- We propose using the concept of locality bias to analyze existing neural sequence models.

- We analyze two challenges for sequence learning (the structure of sentences and contextualized word representations) from the perspective of each model's locality bias, which reveals the complementarity between the Transformer and graph neural networks (GNNs).

- We draw on the complementary strengths of the Transformer and GNNs to propose a contextualized non-local neural network.
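One way to make "locality bias" concrete is to ask which token pairs can exchange information directly within a single layer, and how many stacked layers it takes for a distant token to have any influence. The sketch below is illustrative only and is not the authors' model. It assumes three hypothetical connectivity patterns: a fixed local window (CNN/RNN-style), full connectivity (Transformer-style self-attention), and a predefined graph such as a dependency tree (GNN-style). The function names `locality_mask` and `layers_needed` and the example edges are invented for this sketch.

```python
from collections import deque


def locality_mask(n, kind, window=1, edges=()):
    """Return an n x n 0/1 matrix where mask[i][j] == 1 means token j can
    pass information to token i directly within one layer (illustrative)."""
    if kind == "full":
        # Transformer-style: every token attends to every token (no locality bias).
        return [[1] * n for _ in range(n)]
    if kind == "local":
        # CNN/RNN-style: only tokens within a fixed window are connected.
        return [[1 if abs(i - j) <= window else 0 for j in range(n)] for i in range(n)]
    if kind == "graph":
        # GNN-style: self-loops plus the (undirected) edges of a given structure.
        mask = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
        for u, v in edges:
            mask[u][v] = mask[v][u] = 1
        return mask
    raise ValueError(f"unknown kind: {kind}")


def layers_needed(mask, src, dst):
    """Minimum number of stacked layers before token src can influence token dst,
    computed as a breadth-first shortest path over the one-layer connectivity mask.
    Returns None if dst is unreachable."""
    n = len(mask)
    dist = [None] * n
    dist[src] = 0
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in range(n):
            if mask[v][u] and dist[v] is None:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist[dst]


if __name__ == "__main__":
    # Hypothetical 6-token sentence "the cat I saw yesterday sat" with
    # hand-written (assumed) dependency edges.
    edges = [(0, 1), (1, 5), (2, 3), (3, 1), (4, 3)]
    for name, mask in [
        ("local (window=1)", locality_mask(6, "local", window=1)),
        ("full", locality_mask(6, "full")),
        ("graph", locality_mask(6, "graph", edges=edges)),
    ]:
        print(name, layers_needed(mask, 0, 5))
```

With a window of 1, the first and last tokens need five layers to interact; full connectivity needs one layer but encodes no sentence structure; the graph pattern needs two layers here because the assumed dependency edges link "cat" directly to "sat", though it depends entirely on the graph being given in advance. This trade-off is the kind of complementarity between GNNs and the Transformer that the contributions above point to.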

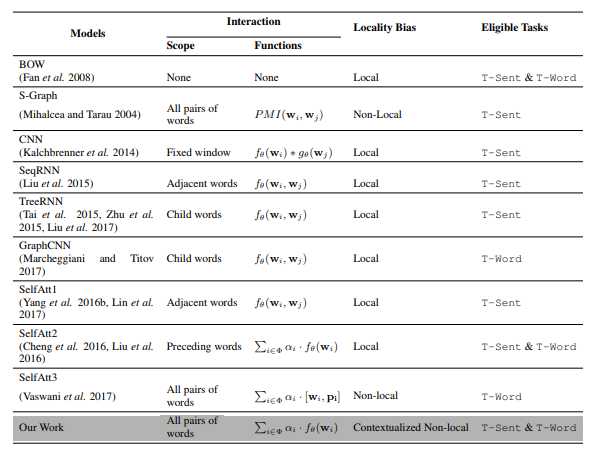

Summary of Neural Sequence Models

Fig.: A comparison of published approaches to sentence representation.